This is the fifth in a series of posts (first, next, previous) in which I am exploring five key technology themes which will shape our work in the coming decade:

- The Emergence of the Individual Narrative;

- The Increasing Perfection of Information;

- The Primacy of Decision Contexts;

- The Realization of Rapid Solution Development;

- The Right-Sizing of Information Tools.

At Last, the Realization of Rapid Application Development

The history of software development may be read from one perspective as a continual increase in efficiency, in terms of the individual programmer, programming teams, and the extended IT team.

A confluence of recent technological advances has combined to rapidly accelerate the speed at which software solutions can be delivered.

The individual programmer has moved to working at a higher and higher level of abstraction over time. The programming team’s tools and processes have improved the coordination of work.

High Level Languages

A simple example of this is in the evolution of computer languages. Each computer only ‘understands’ one language, the machine language for that particular processor, also known as ‘object code’ or ‘op codes’.

It is very difficult to work directly in object code, where you are telling the computer step by step with numbers only what to do. So we don’t. Instead, the lowest level programming is done in a language called ‘assembly’ where there is essentially a 1-1 mapping from the language instruction ‘mnemonics’ to a particular machine language instruction. A program called an ‘assembler’ converts the assembly instructions into opcodes.

So instead of directly writing the opcodes

B8 02 00 00 00

which moves the integer value 2 into the EAX register on the processor (don’t ask), in x86 (Intel) assembler the mnemonic version would be

MOV EAX,2
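To make that 1-1 mapping concrete, here is a minimal sketch in Python of what an assembler does for this one instruction form. It is a toy, not a real assembler: the function name `assemble_mov_imm32` and the `REGISTER_CODES` table are illustrative names invented for this example, and it only handles moving a 32-bit number into a 32-bit register.

```python
import struct

# Register numbers x86 uses when encoding the 32-bit general-purpose registers.
REGISTER_CODES = {
    "EAX": 0, "ECX": 1, "EDX": 2, "EBX": 3,
    "ESP": 4, "EBP": 5, "ESI": 6, "EDI": 7,
}


def assemble_mov_imm32(register, value):
    """Encode `MOV register, value` as x86 machine code (illustrative helper)."""
    # Opcodes B8 through BF mean "move an immediate value into register N".
    opcode = 0xB8 + REGISTER_CODES[register.upper()]
    # The value follows as four bytes, least significant byte first.
    return bytes([opcode]) + struct.pack("<I", value)


machine_code = assemble_mov_imm32("EAX", 2)
print(" ".join(f"{byte:02X}" for byte in machine_code))  # prints "B8 02 00 00 00"
```

One line of mnemonic in, five bytes of opcode out. A real assembler does the same lookup-and-encode job for every instruction the processor supports.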

But it still takes forever to get anything done when you have to tell the processor what to do one thing at a time.

Then in 1952 Grace Hopper, a seminal figure in the software industry, created the first ‘compiler’, a program which could translate a program written in a ‘higher level’ language – a language more intelligible to humans – into object code, the language intelligible to machines.

In the late 50s and early 60s a synergy developed between the development of computer languages and the work in generative grammars by Noam Chomsky and others. Higher and higher level languages and the tools needed to use them emerged. And the productivity of individual programmers soared.

A simple program that prints out “Hello World” is, since the publication of the first books on the C language, frequently the first program learned in any language and the first program used to test the underlying function of a new language.

Here is what ‘Hello world’ looks like in three successively higher level languages: x86 assembler (for Linux), C, and Python.

Hello World x86 ASM:

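    section .text
        global _start

    _start:
        mov edx,len     ; number of bytes to write
        mov ecx,msg     ; address of the message
        mov ebx,1       ; file descriptor 1 (standard output)
        mov eax,4       ; system call number for sys_write
        int 0x80        ; call the kernel
        mov eax,1       ; system call number for sys_exit
        int 0x80        ; call the kernel (ebx, still 1, is the exit status)

    section .data
    msg db 'Hello, world!',0xa  ; the text, followed by a newline
    len equ $ - msg             ; length of the text in bytes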

Hello World in C:

    #include <stdio.h>

    int main()
    {
        printf("Hello world\n");
        return 0;
    }

Hello World in Python:

    print("Hello world")

As applications became more complex, programs called ‘linkers’ were created to take multiple object code files generated by compilers and put them together into a single executable file.

This made it easier for more than one programmer to work on a single application. Each programmer could write code, and the discretely created object files could then be linked together into a single executable.

‘Libraries’ of precompiled object files began to be sold by third parties to provide ready-built functionality that could be included into an application. Some of the new high-level languages depended on the presence of these libraries – in the C program above the line “#include <stdio.h>” includes the references to a standard C library needed so the program knows how to call the functions in those standard objects – in this case the function ‘printf’ to print the text out to the command line. Other libraries contained specialized functions for math, or working with databases.

These large libraries of composable functionality enabled the rapid assembly of sophisticated solutions using code that had been written and tested by others that a programmer could readily integrate into their own programs.
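As a small illustration of composing tested library code rather than writing it yourself, here is a sketch using Python's standard `statistics` library. The sample numbers are made up for the example.

```python
import statistics

# Hypothetical monthly order counts, invented for this illustration.
orders = [12, 15, 11, 19, 14, 16]

# Each call below reuses code that someone else wrote and tested.
average = statistics.mean(orders)
middle = statistics.median(orders)
spread = statistics.stdev(orders)

print(f"mean={average:.1f} median={middle:.1f} stdev={spread:.2f}")
```

Three lines of analysis, none of which required writing or debugging a single formula.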

So now a single executable created by an individual or team could represent person-decades of programming. Large, sophisticated applications could be created in reasonable time frames.

In R, a relatively new language used for statistical analysis, you can load a library, load data, perform sophisticated analysis, and graph the results in only a dozen lines of code[1].

The downside of using libraries of precompiled object files is that if you run into problems, if there are bugs, getting the bugs communicated and demonstrated to the vendor and getting them repaired can add long cycles to development.

The ‘open source’ movement in software is largely about making the source code of library routines available at no cost to programmers, and about the concomitant emergence of communities of programmers to create and maintain their code bases.

The Advent of Networking

An executing program runs in what is called a ‘process space’ representing the memory accessible by the processor executing the program[2].

As it became common for computers to be networked together – networking started in the late 50s, boomed in the 70s with the invention of ‘Ethernet’ technology, but really emerged into the mainstream in the 90s – we moved beyond simply sharing data. Driven by the desire to use the collective power of the networked computers, we began distributing applications across networks.

This was effected by means of a technique called variously ‘Remote Procedure Calls (RPC)’, ‘Remote Procedure Invocation (RPI)’ or ‘Remote Method Invocation (RMI)’. I will call them collectively ‘RPC’ or ‘Remote Procedure Calls’ from here on out for convenience.

Essentially, instead of adding the library into your local program, the library executes on a different computer across the network, and your program calls its functionality at runtime.

As I described above, an executing program runs in a single process space. With some sophisticated networking wizardry behind the scenes RPC makes procedures or functions on other computers look to a running application as if they are on the local machine in the current process space.
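Here is a minimal sketch of that idea using XML-RPC from Python's standard library. XML-RPC is an assumption made for illustration only, chosen because it ships with Python. To keep the example self-contained, a background thread on the local machine stands in for the remote computer, and the `add` function is a hypothetical remote procedure.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """The 'remote' procedure. In real use it would live on another machine."""
    return a + b


# Start a server on a free local port. The thread plays the remote computer.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
threading.Thread(target=server.serve_forever, daemon=True).start()

# The client side: the proxy makes the remote call read like a local one.
port = server.server_address[1]
proxy = ServerProxy(f"http://127.0.0.1:{port}")
result = proxy.add(2, 3)  # packaged up, sent over the network, answered
print(result)

# Stop the stand-in server now that the call is done.
server.shutdown()
server.server_close()
```

The line `proxy.add(2, 3)` is the whole point: to the calling program it looks like an ordinary function call, while the wizardry of packaging the arguments and shipping them across the network happens out of sight.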

Common remote calling protocols (from older to newer) include CORBA, DCOM, and Java RMI.

Services and APIs

But now, with the emergence of the Internet, a new form of composable software functionality has emerged: services.

Conceptually services are simply new standardized types of Remote Procedure Calls. This is the single most important thing to understand about services. As the reach, reliability and speed of the Internet all increased it became an attractive network for application integration. So Internet-capable Remote Procedure Call standards emerged.

There are two common standards. The subterfuge of making the procedures appear to execute in the same process space as the caller has been abandoned. Both service standards typically use the same Internet protocol as web sites, so they have the additional advantage of not needing any special network setup to use – older RPC methods needed special firewall configuration (a firewall is a network security device that controls what traffic can pass through), but firewalls are already set up to pass web traffic.

And they both use (mostly) text-based (as opposed to binary) data standards, which are also web-friendlier as well as being human readable and the flavor of the month.

The older of the two web service standards is called ‘Web Services’. Web Services use a remote procedure call standard called the Simple Object Access Protocol, or SOAP[3]. It uses XML as its data standard.
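To give a feel for what a SOAP call looks like on the wire, here is a minimal sketch that wraps a procedure call in a SOAP 1.1 envelope using Python's standard XML library. The helper `build_soap_request`, the operation name `GetMemberStatus`, and its `memberId` parameter are all hypothetical. A real Web Service would also put the operation in its own namespace and describe it in a WSDL file, which this sketch leaves out.

```python
import xml.etree.ElementTree as ET

# The namespace that identifies a SOAP 1.1 envelope.
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
ET.register_namespace("soap", SOAP_NS)


def build_soap_request(operation, **params):
    """Wrap a procedure call in a SOAP envelope (illustrative helper)."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    # The procedure name becomes an element; each argument becomes a child.
    call = ET.SubElement(body, operation)
    for name, value in params.items():
        ET.SubElement(call, name).text = value
    return ET.tostring(envelope, encoding="unicode")


request_xml = build_soap_request("GetMemberStatus", memberId="12345")
print(request_xml)
```

The resulting text would typically be sent to the service in the body of an ordinary web request.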

But as Web services, which are very simple to use in their simplest form[4], had to deal with real world issues such as security, they got wrapped around the complexity axle. The WS-* (usually pronounced ‘W-S-Splat’) specifications (such as WS-PolicyAssertions and WS-Trust and WS-Federation Passive Requestor Profile and on and on and on) became myriad and labyrinthine and daunting to learn and use.

The newer standard, whose adoption was largely a backlash against the complexity of using Web services, is called REST, which stands for Representational State Transfer. REST is even closer to the language of the World Wide Web, using the standard web (HTTP) commands POST, GET, PUT and DELETE against standard data objects for the functions Create, Read, Update, and Delete. REST is wrestling with the same challenges that have made Web services problematic. We will see how it evolves.
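The mapping from web commands to Create, Read, Update, and Delete can be sketched in a few lines of Python. This is a toy with no actual networking: the `handle` function, the in-memory `store`, and the `/members` resource are hypothetical names for illustration, and the numeric status codes follow common web conventions rather than anything REST itself mandates.

```python
import itertools

# In-memory stand-in for the data objects behind a REST service.
store = {}
next_id = itertools.count(1)


def handle(method, path, body=None):
    """Dispatch an HTTP-style request to the matching CRUD operation.

    Returns a (status_code, payload) pair. Paths look like
    "/members" or "/members/1".
    """
    parts = path.strip("/").split("/")
    item_id = int(parts[1]) if len(parts) > 1 else None

    if method == "POST" and item_id is None:  # Create
        new_id = next(next_id)
        store[new_id] = body
        return 201, {"id": new_id}
    if item_id not in store:
        return 404, None
    if method == "GET":  # Read
        return 200, store[item_id]
    if method == "PUT":  # Update
        store[item_id] = body
        return 200, store[item_id]
    if method == "DELETE":  # Delete
        del store[item_id]
        return 204, None
    return 405, None


created_status, created = handle("POST", "/members", {"name": "Ada"})
member_path = f"/members/{created['id']}"
read = handle("GET", member_path)
updated = handle("PUT", member_path, {"name": "Ada Lovelace"})
deleted = handle("DELETE", member_path)
missing = handle("GET", member_path)
print(created_status, read, updated, deleted, missing)
```

Note how little there is to learn: four verbs and an address for each data object.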

A set of services with a common theme that is managed as a group is called an API. API stands for ‘Application Programming Interface’ – many or all of the services in a single API would typically be called by a given client application using them.[5]

As the use of APIs became commonplace, special-purpose platforms for hosting and managing APIs appeared, offering built-in security capabilities, monitoring and so on. Examples are Apigee and Mashery, gobbled up by Google and TIBCO respectively, both mature products which have been in production for years. Amazon released their API platform, API Gateway, in early July 2015. Kong is the biggest open source platform.

APIs are growing like wildfire and are revolutionizing the development landscape. The popular ProgrammableWeb site tracks the growth of APIs. Here is their chart which shows the growth of APIs through 2016:

Now, with the advent of APIs, the scope of individual applications is no longer limited to the code a development team can write, the libraries they can buy or download and link into their applications, and the third party tools they can install on their local networks and make remote procedure calls into.

Any published API on the Internet they have rights to access – and many of them are free – can now be part of their application.

So nowadays if you want to make an application that includes sophisticated mapping, for example, you don’t write a mapping routine, or buy a mapping library. You just call the Google Maps API. What used to take months, or weeks, and thousands or tens of thousands of dollars now takes ten minutes and is free.

The advent of APIs is a game changer. The combination of REST APIs, highly productive new languages and frameworks for making web applications such as JavaScript and Angular.JS, and readily accessible open source libraries built around new standards, has created a quantum leap in productivity.

A single programmer who knows the new ‘stack’ can develop solutions faster than a team of programmers using earlier tech.

Model Driven Architecture

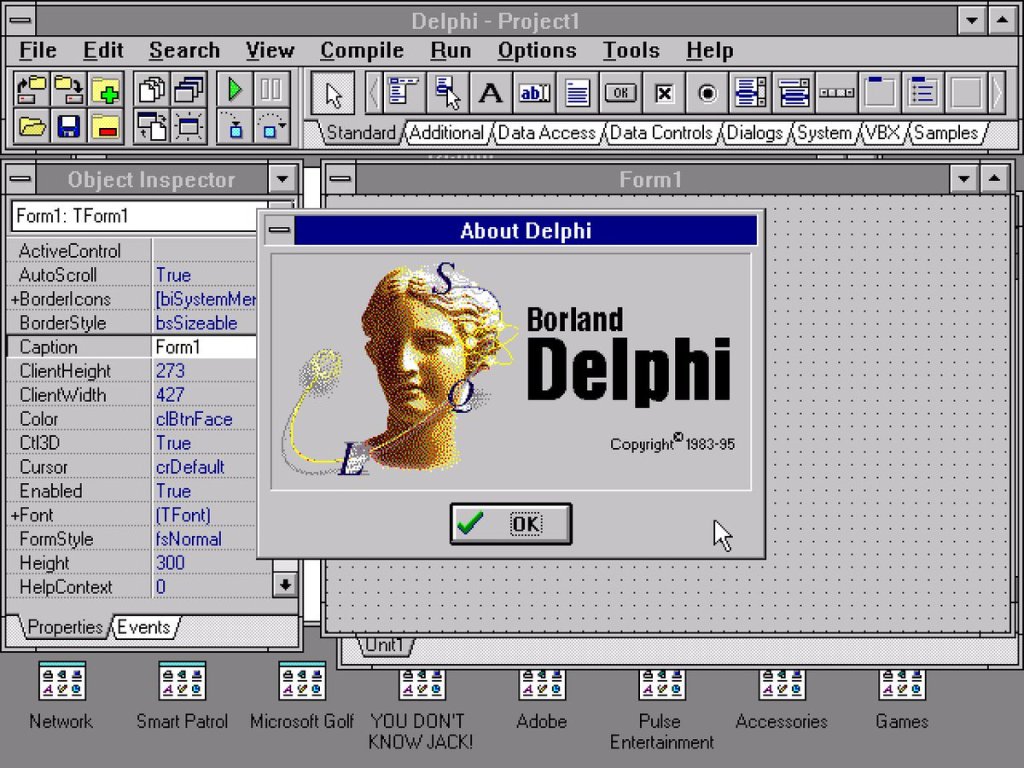

Software application interfaces became graphical (GUI – Graphical User Interface) in the early 1990s[6].

Application ‘frameworks’ also appeared, essentially pre-written code for managing the most common parts of GUI applications and ‘plugging in’ new code.

Programmer productivity soared. Visual Basic-like Rapid Application Development (RAD) tools emerged for other computer languages, including new languages created for use in such environments.

A new style of programming, with its own languages, emerged in the 1990s and came to dominate the development landscape: object-oriented programming.

Software modeling, describing software with diagrams in graphical languages such as the Unified Modeling Language, emerged in a symbiotic relationship with object-oriented programming: OO programs lent themselves to being graphically modeled.

New tools emerged which could generate most of an application from a set of models[7].

At the most sophisticated level, so called ‘round trip engineering’ tools enabled you to make changes in the code or the model and keep them in sync.

But, for a number of reasons, the initial promise of model-driven development wasn’t realized.

First, formal Object-Oriented design techniques and the use of large application development tool suites got wrapped up with formal enterprise software development methodologies. Even though some of them were intended to be iterative, the approaches, such as the Rational Unified Process, or RUP, were perceived as heavyweight and essentially waterfall.

The second reason that model-driven development’s promise was not realized is that the tools were not sophisticated enough. They did an ok job with user interfaces. But the user rapidly hit a point of diminishing returns. The hard parts of writing a program are not the parts that early model-driven code-generators could generate, the UI and the data model. The hard parts are the complex business rules, the algorithmic parts.

The early failure of model-driven tools was partly a function of the limitations of the most successful modeling approach of the 1990s, the convergence of three discrete modeling approaches into something called the Unified Modeling Language or UML. UML did not address business rules. A formal rule language called the Object Constraint Language or OCL, which came out of IBM, was widely adopted as a bolt-on to UML to describe rules, eventually becoming part of the standard, but the architecture tools’ struggles with business rules had already made them fall from favor.

Early RAD tools such as Visual Basic and Delphi could already do most of what a model-driven architecture tool could do, and with much lower overhead and fewer restrictions.

RAD tools featured key model-driven architecture functionality, such as the ability to generate UI screens populated with widgets like combo boxes and buttons and skeleton code for handling user actions, and the ability to write the (SQL DDL) code to build a database structure from a model.
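The database half of that is easy to picture with a small sketch. Below, a Python dataclass plays the role of the model and a few lines of code write the SQL DDL from it. The `Member` class, the `generate_ddl` helper, and the tiny type mapping are all hypothetical, and real tools worked from much richer models with keys, relationships and constraints.

```python
import sqlite3
from dataclasses import dataclass, fields

# Map Python field types to SQL column types (a deliberately tiny mapping).
SQL_TYPES = {int: "INTEGER", str: "TEXT", float: "REAL"}


@dataclass
class Member:
    """A hypothetical model class standing in for a diagrammed entity."""
    id: int
    name: str
    balance: float


def generate_ddl(model):
    """Generate a CREATE TABLE statement from a dataclass model."""
    columns = [f"{field.name} {SQL_TYPES[field.type]}" for field in fields(model)]
    return f"CREATE TABLE {model.__name__.lower()} ({', '.join(columns)})"


ddl = generate_ddl(Member)
print(ddl)  # prints: CREATE TABLE member (id INTEGER, name TEXT, balance REAL)

# Check the generated DDL by running it against an in-memory database.
connection = sqlite3.connect(":memory:")
connection.execute(ddl)
```

Generating this kind of structural code is the easy part, which is exactly the limitation described above: nothing in the model says what the business rules for a member's balance should be.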

Even desktop productivity apps such as Microsoft Access got the ability to generate the framework for simple applications the user could finish with Visual Basic for Applications code.

The final reason is the nature of such tools and their cultural fit. Model-driven architecture deals with high level abstractions. Abstract, theoretical computer science has always been more popular in Europe than in North America. America favors algorithms and process over models[8].

Despite the increasing ‘smallness’ of the world software community, driven both by the Internet and by the growing multiculturalism of software development, the distinction still remains.

I believe the greater adoption of model-driven architecture tools is inevitable, but not for client applications.

First, as I described above, data about real things in the world, such as consumers, is _always_ a model of reality, so we are necessarily doing model-based development. We need to embrace it. We are engineering information, a realm where abstraction is powerful.

But mostly the greater adoption of model-based architecture will come because such tools are productivity multipliers, engineers are expensive, and money will inevitably, eventually, eternally out.

But model-driven architecture will not prevail in the client-facing application space. In the coming discussion of the theme ‘The Right-Sizing of Information Tools’ I will demonstrate how large, monolithic applications such as Facets or Salesforce are giving way to tiny dedicated-purpose applications which share data and function via services.

There is a current trend in development to return to command-line tools. That trend is divisive – many in the community of web app development who do not have lower level programming backgrounds are feeling disenfranchised. But the simple truth is it has become the most efficient path to developing small web apps to write code and scripts.

The True Value of the Cloud

And now, finally, enter The Cloud[9].

Let’s do a thought experiment. Imagine you have a particular database and related tools – let’s say the currently popular Hadoop ‘ecosystem’ – HDFS, Yarn, HBase, etc. – on a server in the Cloud and on a server in your own datacenter. What is the functional difference between the two?

The answer is none, zip, nada. Exactly the same functionality.

The true value of the Cloud is in the lower time and cost it takes to get a new solution up and running, or to scale an existing solution. There appears to be a functional difference because the Cloud is full of new and sexy technologies, most of which you do not have in your data center.

The spin-up cost and the tear-down cost in the Cloud are so low it becomes cost-effective to try new solution approaches.

But mostly it becomes linearly faster (‘exponentially faster’ would be hyperbolic[10]) to get started on the programming and configuration parts.

The overhead of getting approval for a few hundred thousand dollars’ worth of servers and network interface cards and whatnot, ordering the hardware, installing the hardware, setting up the hardware, installing the operating system and software containers (JBoss, WebSphere and suchlike), setting up firewall rules, and so on goes away in favor of getting approval for a relatively modest initial outlay and a modest monthly usage bill.

If the solution has to scale, instead of ordering more hardware and jumping through all the hoops again, you call up the web app controlling your Cloud account and pull a slider over to the new capacity you need. Done.

And as I said, the Cloud is full of new and sexy tech – which is all based on modern standards of interoperability.

This becomes a ‘force multiplier’. Because the new infrastructure is not just your users using a Cloud app such as Salesforce. It is not just your internal data needs being sourced from some Cloud-based ‘data lake’.

It is a constellation of Cloud-based tech all working together to provide comprehensive solutions – a Cloud-based database integrated via a Cloud-based integrator with a Cloud-hosted app accessing Cloud-based APIs.

For software architects and engineers versed in the new technologies, it is a solution smorgasbord.

But to figure out your path to adoption, it is important to understand that the Cloud is not just one kind of thing.

When we say ‘Everything-as-a-Service’ we risk creating a confusing conceptual unity where it does not exist, except in some very broad sense. Having ‘Service’ as part of the name also creates a certain amount of confusion vis a vis REST and SOAP services. While a given ‘as-a-Service’ offering may leverage REST or SOAP services in its implementation, the word ‘Service’ in ‘as-a-Service’ does not mean the same thing as the ‘Service’ in ‘Service-Oriented-Architecture’, ‘Web Service’ or ‘RESTful Service’.

We can categorize Cloud solutions into members of the ‘as a Service’ (aaS) groups:

- Infrastructure-as-a-Service (IaaS)

- Platform-as-a-Service (PaaS)

- Data-as-a-Service (DaaS)

- Software-as-a-Service (SaaS)

‘Everything-as-a-Service’ simply means taking advantage of all of them as appropriate.

Infrastructure-as-a-Service provides the client physical or virtual servers and the resources (such as operating systems, load balancers, network switches, etc.) needed to support them.

Mature IaaS solutions such as the Amazon Elastic Compute Cloud (EC2) (part of the Amazon Web Services Cloud computing platform) dominate the IaaS market.

Platform-as-a-Service builds on IaaS to provide, typically, in addition to the servers, a programming language execution environment, database, and web server.

The classic example is called ‘LAMP’, a server with the Linux operating system, Apache web server, MySQL database, and PHP programming language – typically used to create web applications.

A sexier current example is the JavaScript-based ‘MEAN’ stack: MongoDB, Express.JS, Angular.JS and Node.JS.

Data-as-a-Service puts data, usually single-purpose data such as analytics data, into the Cloud behind a standard services-based interface.

Software-as-a-Service provides subscriptions to centralized Cloud-based software applications such as Salesforce.com.

The farther you move down the As-A-Service stack from infrastructure to software, the greater the gain in time-to-market for your solutions.

‘Righter’ the First Time

In the late 90s, in response to traditional heavyweight development methodologies that followed a so-called ‘waterfall’ approach, which were seen as a root cause of software project failure, a new development methodology called Agile emerged.

Instead of a heavy up-front object-oriented analysis and design phase and the creation of complex Use Cases, Agile consisted of small teams using lightweight requirements discussion documents called ‘user stories’. Concurrently a number of small, lightweight scripting languages such as Ruby and Python emerged, which enabled productive development in a return to essentially a command-line world.

At the same time how and when testing happened changed. We moved from writing code, then testing it, to writing the tests up front.

In a manner similar to the changing health care focus from fixing illness to maintaining healthiness, current best practice – called Continuous Integration or CI – assumes a complete build that passes all its unit and integration tests as the baseline state of a component or application.
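Writing the tests up front can be sketched with Python's standard `unittest` library. The `apply_discount` function and its discount rule are hypothetical, invented for this illustration. The point is the order: the tests come first and describe what the code must do, then the code is written to make them pass.

```python
import unittest


class TestApplyDiscount(unittest.TestCase):
    """Written first: these tests describe what the code must do."""

    def test_takes_percentage_off_the_price(self):
        self.assertEqual(apply_discount(price=100, percent=20), 80)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(price=100, percent=0), 100)


def apply_discount(price, percent):
    """Written second: just enough code to make the tests pass."""
    return price * (100 - percent) // 100


# A CI server would run the whole suite on every change and flag any failure.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestApplyDiscount)
result = unittest.TextTestRunner().run(suite)
print("build is green" if result.wasSuccessful() else "build is broken")
```

Under Continuous Integration, 'green' is the normal resting state. A change that turns the build 'broken' gets fixed before anything else happens.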

Agile in conjunction with a test-forward approach and CI enables agile teams to get it ‘righter’ the first time. (Although I believe Agile has hit the shoals in the back office, and that we need a reset to get it even ‘righter’. I speak to that in detail in this post from my series on the CONQUER distributed solutions architecture.)

With more powerful hardware, virtualization has blossomed. In its latest incarnation, we are not virtualizing entire computers – although we are doing that too – we are virtualizing something ‘lighter’ – the operating system, software, libraries, config files, etc. needed to execute a particular application. These ‘containers’ (mostly Docker) can be deployed and managed as units, much like the standardized containers used worldwide in shipping. An entire ecosystem of technology has grown up around their care and feeding (mostly Kubernetes).

Recent estimates have 12% of IT leaders having adopted containers and 47% planning to.

Standard Oil

There are many benefits to the emergence of integration standards, some not as obvious as others. The adoption of such standards fosters the reuse of code written to work within the standards. They act as standard oil to facilitate the growth of rapid and model-driven tools and libraries that can safely assume the standards.

New standards let experts do the hard bits, making the rest easier and simpler. Consider this. If you have a very complex piece of code, you put it behind a service. Your expert who understands all that complexity creates and maintains the capability and creates a simple service interface for other developers to consume. You don’t need a dozen experts, or a single expert on someone else’s team, bottlenecking your project.

Over time this allows you to optimize your expensive software human resources. You have a handful of seasoned experts creating services, and a host of young hungry developers well versed in the current scripting/web app technology stack consuming those services in client applications. You can have – you must have – a heterogeneous set of client tools. That space evolves so quickly now it becomes stifling to the enterprise to try to stick on one standard for a decade.

Vendor Lock In? Abstraction to the Rescue?

You have heard about multi-cloud strategies, sometimes meaning using two or more of the big public clouds, AWS (Amazon’s) _and_ GCP (Google’s) _and_ Azure (Microsoft’s), sometimes meaning managing your data center as a private cloud along with one or more public or proprietary clouds.

The truth is, we are all getting locked in to particular public clouds more tightly than any enterprise ever got locked in to IBM or Digital.

Having a rich palette of interoperable TinkerToys(r) in a particular public cloud, widgets that span all levels in the -As-a-Service stack, is a powerful, practically irresistible siren song.

Trying to adopt a portable technology tool solution, say Kafka, intending to deploy it across some future set of clouds, inevitably raises the question: why not just use X instead, say Kinesis or Dataflow?

An obvious alternative would be to use GCP’s Dataflow for streaming functionality needed by apps on AWS. Which runs us headlong into a discussion about distributing workloads, and the nature of doing fine-grained integration (versus business-level service integration) ‘locally’ versus ‘distributedly’. And we start debating about the 60 gig backplane speeds for local integration on AWS versus the throughput we might get going over the Internet and through the woods to Azure’s house we go.

Eventually integration pipes will be big enough, and reliable enough, for fine-grained distribution of functionality. (Had this same conversation in 1989, 1999, and 2009 : ).

Until then, ‘multi-cloud’ largely means the establishment of coarse-grained interoperability across clouds.

But we are talking about software, about information tools. And information tools can work at different meaningful levels of abstraction.

We are seeing the emergence of a new generation of multi-cloud tools that take a different approach: design solutions at a level of abstraction that can be projected or mapped into many different clouds, public and private.

Model-driven architecture is having a resurgence in so-called ‘low-code’ tools, a new generation of business productivity tools that enable high skilled business people/low skilled IT people to readily create and deploy distributed applications.

More about that in the next post in this series on the five key technology themes transforming the 2020s: the right-sizing of information tools.

Stay tuned.

[1] An R application I wrote five or six years ago as part of a proof-of-concept for custom market segmentation for our Medicare business was literally 15 working lines long – 48 lines with comments and spaces.

[2] Yes, techno-savvy reader, it’s a lot more complicated than that, with virtual address spaces mapped into physical memory via page tables, etc. But this will do for now.

[3] The SOAP protocol can actually use transport layers other than the Web’s HTTP, such as JMS or even SMTP. Explaining how all that works is beyond my scope here. If you are eager to pursue it, gentle reader, here is a good starting point, a nice fat PDF file on the standard Open Systems Interconnection seven layer computer networking model.

[4] One of the reasons for the success of the Web is that web pages can be very simple – and even have errors – and still work – there is a low skill barrier to entry. And on that initial capability tier one can get a lot done. But there are also higher, more expert tiers you can climb to, such as using Cascading Style Sheets, and so on, whose capabilities will reward your training and study investment by opening new broad tiers. XML is the same way. There is an initial tier that requires a level of rigor higher than Web pages per se. But once there you can do a lot. If you want to climb higher you can pursue formal data models called schemas (XSD’s), functional programming widgets to translate XML into something else, including just different XML, called transforms (XSLT’s), and so on. The Web Service standard tried to follow the same approach, consciously or not.

[5] The terminology is loose and can get confusing: ‘API’ can refer to a collection of Web or REST services, or a particular Web or REST service, or a public stand-in (proxy) for a private service, or for a function or the set of functions exposed by a runtime component via RPC, such as the OLE API for Excel.

[6] The rapid adoption of GUIs was driven by a ‘perfect storm’:

- the widespread adoption of Windows 3;

- the end of the 5 year depreciation period for the 80286-based computers corporations had purchased in the 1980s, enabling them to buy new 80386-based computers, which supported in hardware Windows 3’s ability to run multiple programs concurrently and ‘task switch’ among them;

- and the emergence of Rapid Application Development tools starting with Visual Basic in 1991, software which enabled programmers to rapidly develop the graphical parts of their applications.

[7] I was the architect and development lead on several parts of a model-driven development tool in Silicon Valley back in the day so have first-hand knowledge of the challenges with them. The technology I helped develop survived the DotCom crash – it was used at a company called GlobalWare Solutions to generate custom web solutions until just a couple of years ago.

[8] Communications of the ACM, August 2015, Vol. 58 number 8 page 5 Editor’s Letter, Moshe Y. Vardi, ‘Why doesn’t ACM have a SIG for theoretical computer science’

[9] I hear that in Vivian Stanshall’s voice from Mike Oldfield’s seminal album of the early 70’s ‘Tubular Bells.’ For the younger among you, that was the theme song in ‘The Exorcist’. For the even younger among you, never mind, go play World of Warcraft.

[10] Sorry, geek joke, occupational hazard.