This is the seventh in a series of posts (first, next, previous) in which I am exploring five key technology themes which will shape our work in the coming decade.

The Operational Business Impacts of our Technology Themes

Having analyzed our five technology themes, let’s look at how the technology tapestry we’ve woven from those interrelated threads will shape, influence, control and inform our business operations – the day over day.

Here is a recap of the five themes:

The Emergence of the Individual Narrative

Consumers’ life journeys become the data heart of operational and analytic systems.

The Increasing Perfection of Information

Complete, accurate data presented in meaningful contexts is available on demand where and when it is needed.

The Primacy of Decision Contexts

Business processes evolve into the automated integration of expert decisions, which themselves will be increasingly automated. Health decisions by consumers and caregivers alike will be optimized with rich contexts of knowledge, recommended actions, and insights.

The Realization of Rapid Solution Development

New and improved information tools are delivered, adopted, and retired at a rapid cadence.

The Right-Sizing of Information Tools

Monolithic applications give way to business palettes of discrete, targeted tools integrated and orchestrated across a common backbone of services and events.

Because of the interwoven nature of the technology themes, there was quite a bit of redundancy and cross-reference in their exposition. The same thing will occur here as I discuss their business impacts, which I trust you will also forgive.

A Common Tongue

As I described earlier, an ontology is an abstract data model with closely associated business rules. It is derived in part from the database and application data models used by the business, but it is created and maintained independently of them.

Part and parcel of creating an orchestration of solutions is having an ontology in the integration space, a Canonical Model, a lingua franca. Just as the original Mediterranean lingua franca enabled sea-based trade for over 900 years across cultures with different languages, a comprehensive health solutions ontology will enable the semantically consistent integration of internal and external solutions.

This is vital and necessary work to achieve our objective this decade. The orchestration of function is expanding beyond your four walls. The same approach is the key to success in interoperability at all levels. The ten-year federal interoperability roadmap from the ONC HIT does not go far enough in spelling this out. But it does emphasize that we “will need to converge and agree on the use of shared and complementary format and vocabulary standards to satisfy specific interoperability needs. The use of multiple, divergent information formats over the long term is unsustainable and perpetuates systemic roadblocks and expenses that could otherwise be removed for technology developers, providers and individuals.”

In practice, having a single massive ontology is difficult to achieve. Instead, an ontology for each discrete domain, such as clinical practice, can be created and maintained, with a shared ontology for common concepts which span domains.

Your APIs should be implemented with service interfaces that exchange canonical elements.
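To make that concrete, here is a minimal sketch of two source systems being translated into one canonical element before anything crosses a service interface. Everything in it is an assumption for illustration: the `CanonicalMember` shape, the field names of the claims-platform and CRM records, and the date formats are hypothetical, not the layout of any real product or of FHIR.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CanonicalMember:
    """Hypothetical canonical element shared by every service interface."""
    member_id: str
    family_name: str
    given_name: str
    birth_date: date


def from_claims_record(rec: dict) -> CanonicalMember:
    # Assumed claims-platform layout: "LAST,FIRST" name and a YYYYMMDD date.
    last, first = rec["SBSCR_NAME"].split(",", 1)
    dob = rec["DOB"]
    return CanonicalMember(
        member_id=rec["MEMBER_NO"].strip(),
        family_name=last.strip().title(),
        given_name=first.strip().title(),
        birth_date=date(int(dob[:4]), int(dob[4:6]), int(dob[6:8])),
    )


def from_crm_record(rec: dict) -> CanonicalMember:
    # Assumed CRM layout: separate name fields and an ISO-8601 date string.
    return CanonicalMember(
        member_id=rec["externalId"],
        family_name=rec["lastName"],
        given_name=rec["firstName"],
        birth_date=date.fromisoformat(rec["birthDate"]),
    )
```

The point of the design is that each system keeps its native format behind its own translator, while every consumer of the API sees only the canonical form, so two records describing the same person compare as equal.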

Because a consistent clinical vocabulary is so important to such an ontology, and so vital to the distribution of health care services we are driving, we need to build it out as quickly as possible.

FHIR has emerged as the leading current standard in interoperability. It has, and will have, issues. But in the same way that HIPAA EDI transactions for claims and eligibility have succeeded despite 4010 being dated out of the gate, FHIR will succeed too.

To be crystal clear: if you do not immediately make a full-court press toward the deployment, adoption and support of the new standard stack of integration technologies, you will fail.

Federation – Your Five Year Mission (yes, that’s a Star Trek joke)

In the 2020’s future of health solution choreography and orchestration, business functions as well as ‘backplane’ functions such as identity management, security, and privacy will need to be delivered by APIs.

That is all well and good.

But your practical challenge over the next five or ten years is that your current infrastructure consists primarily of those large ‘monolithic’ applications such as Facets and Salesforce. And I don’t see you ripping those out and replacing them anytime soon.

How do you integrate those into the target 2020 environment?

The answer is ‘federation’.

Rather than a single conductor conducting the strings, woodwinds and percussion of an orchestra of services, the conductor will conduct a combination of existing ensembles – our monolithic apps – and discrete services – the individual players of tiny cohesive apps and services.

It will have to be as if the benefit song ‘We Are The World’ had not just been Lionel Richie, Stevie Wonder, Billy Joel, Bob Dylan, Ray Charles and 16 other individual music stars singing a single song, but also featured the Rolling Stones, U2, and Aerosmith, each band doing their own version, all at the same time, making it all sound good together.

Here is a more specific example. If you are going to succeed in the new consumer orientation, you need to enable continuity and intimacy across all channels in your consumer communications. If a customer calls in, and has to get transferred, the person who picks up the call needs to know who it is, what they are calling about, who they just talked to and what was discussed.

The problem is that currently that information is recorded in the workflow systems of different applications. And the customer identity is recorded in different operational systems in many departments.

The solution is for you to federate those workflow systems together, so they are effectively a single enterprise workflow system, to federate consumer identity together to ‘track’ each individual consumer across systems.

We can extend that idea to operations in general, not just workflow. How do you operationally integrate monolithic applications such as Facets with the evolving host of small ‘best of breed’ point solutions?

Federation is the model for success. But at what grain, at what level? It is impractical to expose and integrate every function.

There are a number of keys to successful federation. Let’s use an example close to hand. We can use our own U.S. government system, our federation of states as defined in the U.S. Constitution, as our guide.

First, federal control needs to be limited to a finite number of clear functions. Those should include (with Constitutional references in parentheses):

- Overall Costs and Benefits (‘general welfare’)

- Interactions with External Parties (‘common defense, declare war, make rules of war’, ‘no state shall enter any Treaty, Alliance or Confederation’)

- Financials and Audits (‘tax, borrow, pay debt’)

- Data and Integration Standards (‘weights and measures’)

- Integration (‘interstate commerce’, ‘post offices and roads’)

Second, where there is a conflict, federal regulation trumps local regulation (The Constitution, federal laws, and treaties ‘shall be the supreme law of the land’).

The technical path for this is to create what software architects call a ‘layer of abstraction’ surrounding each monolithic application. That layer of abstraction consists of a set of services, an API, through which you do all interactions with the application. You then orchestrate those services as needed.

You combine that with a set of enterprise services, such as workflow services, that provide common functions to your ‘right-sized’ solutions as needed, and a consumer master that links together common identities across operational systems.
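Here is a rough sketch of how those pieces might fit together, using a replacement ID card request as the example. All of the names are hypothetical: `LegacyClaimsPlatform` and its `submit_txn` call are stand-ins for whatever native interface your monolith actually exposes, and `IdCardService` and `ConsumerMaster` are illustrative contracts, not an existing product API.

```python
from typing import Protocol


class IdCardService(Protocol):
    """Hypothetical service contract; callers never see the system behind it."""
    def request_replacement_card(self, member_id: str) -> str: ...


class LegacyClaimsPlatform:
    """Stand-in for a monolithic application with its own native interface."""
    def submit_txn(self, txn_code: str, payload: dict) -> dict:
        return {"status": "OK", "ref": f"{txn_code}-{payload['mbr']}"}


class LegacyIdCardAdapter:
    """Layer of abstraction: translates the contract into native calls."""
    def __init__(self, platform: LegacyClaimsPlatform) -> None:
        self._platform = platform

    def request_replacement_card(self, member_id: str) -> str:
        result = self._platform.submit_txn("IDCARD", {"mbr": member_id})
        if result["status"] != "OK":
            raise RuntimeError("replacement card request failed")
        return result["ref"]


class ConsumerMaster:
    """Links one enterprise consumer id to each system's local identifier."""
    def __init__(self) -> None:
        self._links = {}  # consumer_id -> {system name -> local id}

    def link(self, consumer_id: str, system: str, local_id: str) -> None:
        self._links.setdefault(consumer_id, {})[system] = local_id

    def local_id(self, consumer_id: str, system: str) -> str:
        return self._links[consumer_id][system]


def replace_card(consumer_id: str, master: ConsumerMaster, cards: IdCardService) -> str:
    # Orchestration step: resolve the identity, then call the service contract.
    return cards.request_replacement_card(master.local_id(consumer_id, "claims"))
```

Because `replace_card` depends only on the contract, a right-sized replacement for the monolith's ID card function could later be swapped in behind `IdCardService` without touching the orchestration.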

Creating those service layers enabling federation will be easier or harder depending on the integration sophistication of the legacy platform.

Even in advance of such large-scale federation you can provide value by having workflow triggers for common customer service tasks available to anyone. If a member needs replacement ID cards, anyone at the company should be able to do it with the push of a button.

Improved OpEx Reduction

A business decision context-based approach in conjunction with rapid development and right-sized solutions will enable you to whittle your operating expenses to the bone.

If you have a person whose primary job is keeping information flowing, for whatever purpose, they will be replaced by automation.

This is of course not a novel idea. The relentless advance of computer automation was the theme of the Tracy & Hepburn social comedy Desk Set in 1957.

So is this just the same old bogeyman?

The replacement of human data agents by machines has been relentless. It has just become so commonplace we don’t bother to make movies about it anymore.

But the bar continues to be raised on the kinds of activities that can be automated. With the introduction of rules engines we have blurred the line between having rules embedded in programs that people use to do the activities and having more autonomous software agents do the activities themselves.

We should perform a business decision context analysis of our existing processes (and ‘meta-processes’ – those processes whose decisions shape our processes).

The initial goal should be to clearly identify the business decision nexuses. That will identify your so-called ‘automation boundaries’ – those parts of our processes that serve only to get information into and trigger a decision, and the parts that carry the decision on toward the next decision point.

Having done that, you should create and execute on an efficiency roadmap to systematically move the automatable parts of the flows to your new information infrastructure using messaging as the primary information conduit. You can prioritize that work in two ways: by automating the most expensive human information flows, and by improving the highest value decisions.

For decisions whose primary context is provided by monolithic applications you will need to lay the groundwork for increased automation by implementing your federation strategy.

For each Business Decision Context you should isolate and clarify the control flow. You need to understand the trigger(s) – the control input – and the guard(s) – the control output.

Having done that, you should identify all the information needed to establish the Decision Context – the information the decision maker needs to have to make the call – and ensure it is all available in an easy-to-apprehend timely fashion.

Then finally you should position each decision on your decision automation timeline.

That timeline ranges from ‘Rote Decision – Automatable with Rules Engine’ to ‘Human Needed with Deep Expertise in X’.
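One way to capture the results of that analysis is a small record per decision context. The sketch below is an assumption about how you might model it, not a prescribed schema: the `DecisionContext` fields, the event names, the $500 threshold, and the middle `ANALYTICS_ASSISTED` position on the timeline are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Optional


class AutomationLevel(IntEnum):
    """Positions on the decision automation timeline (middle value is assumed)."""
    ROTE_RULES_ENGINE = 1
    ANALYTICS_ASSISTED = 2
    HUMAN_DEEP_EXPERTISE = 3


@dataclass
class DecisionContext:
    name: str
    trigger: str          # control input that opens the context
    required_info: tuple  # facts the decision maker needs to make the call
    guard: str            # control output that releases the next step
    level: AutomationLevel
    rule: Optional[Callable[[dict], bool]] = None  # only for rote decisions

    def missing_info(self, facts: dict) -> list:
        return [key for key in self.required_info if key not in facts]

    def decide(self, event: str, facts: dict) -> Optional[str]:
        """Return the guard only when a rote rule can decide and it passes."""
        if event != self.trigger or self.missing_info(facts):
            return None
        if self.level is not AutomationLevel.ROTE_RULES_ENGINE or self.rule is None:
            return None  # route to a person or an analytics model instead
        return self.guard if self.rule(facts) else None


# Hypothetical rote decision: approve small claims for eligible members.
ctx = DecisionContext(
    name="claim auto-approval",
    trigger="claim.received",
    required_info=("member_eligible", "billed_amount"),
    guard="claim.approved",
    level=AutomationLevel.ROTE_RULES_ENGINE,
    rule=lambda f: f["member_eligible"] and f["billed_amount"] <= 500,
)
```

Sorting a catalog of such records by `level` gives you a first cut of the timeline, and `missing_info` flags contexts where the information the decision maker needs is not yet reliably available.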

You first need to clearly define what X is – what knowledge and experience a human needs to be well-qualified to make that decision. You need to ensure you have redundant human experts. You also need to ensure you have a talent pipeline in place to keep those roles filled. (One concern going forward is this: in a world of increasing automation how do humans gain the deep knowledge and experience they need without ‘learning the ropes’ making lower-level decisions and moving information around?).

The interesting part of your decision automation timeline lies in between deterministic automation and human expertise. That is the part of the timeline where you might gain an advantage by making good decisions more quickly, more cheaply and more consistently than your competition by the application of advanced data analytics and artificial intelligence.

Operationalizing Analytics

Up to this point most of us have been cherry-picking where to apply analytics. You need to replace that with the systematic identification of critical business decision points – which the process analysis I have been describing will provide.

This will enable you to operationalize analytics. That should be your goal – to have analytics spring organically from your processes.

You may not have the infrastructure in place today to support the widespread operationalization of analytics.

There are three process levels where these advanced analytics can obtain.

Here is the approach needed at these three levels:

At the highest level, the enterprise strategic level, you want to make better decisions on where to make significant investment in order to flourish in the rapidly changing health solutions landscape. You can’t afford to react to such changes. You must anticipate them. Predictive analytics can help you leverage the past to understand the near-term future.

At the next level, the division strategic level, you want to make better decisions to improve your market shares.

At the department tactical level, you want to improve the bottom line by optimizing your processes. Where can you cost-effectively introduce automation? How can you speed up time to market for new products?

As you successfully embed analytics into your culture, the makeup of your business teams will evolve. You can anticipate some of the growing pains that will involve and ameliorate their impact.

The Rise of the Quants

There is a recurring pattern in business. The first scorekeeping of how well business was doing – and still the primary method – is finances. Every company has accounting – partly to keep money flowing, but mostly to keep score.

The familiar pejorative ‘bean counter’ has overtones of parsimony, but it mostly means a person whose primary concern is quantification.

With the emergence of business process engineering and more formal approaches to business process management, we have started creating and using metrics that are not financial, for example the calculation of various customer satisfaction scores.

Most enterprises have a ‘balanced scorecard’ perspective consisting of a number of measures, both financial as well as customer and internal metrics.

Process optimization is being driven from the perspective of improving these metrics. Payers all measure the ‘auto-adjudication rate’ of claims – the percent of well-formed claims that are received and processed through to payment without touching human hands.
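As a back-of-the-envelope illustration of that metric, here is a minimal sketch that computes it from a list of claim records. The record fields (`well_formed`, `paid`, `manual_touches`) are hypothetical; your claims platform will name and define them differently, and exact definitions of the rate vary from payer to payer.

```python
def auto_adjudication_rate(claims: list) -> float:
    """Share of well-formed claims paid without any manual intervention."""
    well_formed = [c for c in claims if c["well_formed"]]
    if not well_formed:
        return 0.0  # no well-formed claims received, so nothing to measure
    untouched = sum(
        1 for c in well_formed if c["paid"] and c["manual_touches"] == 0
    )
    return untouched / len(well_formed)


sample_claims = [
    {"well_formed": True, "paid": True, "manual_touches": 0},
    {"well_formed": True, "paid": True, "manual_touches": 2},
    {"well_formed": True, "paid": True, "manual_touches": 0},
    {"well_formed": False, "paid": False, "manual_touches": 1},
]
rate = auto_adjudication_rate(sample_claims)
```

In this made-up sample, two of the three well-formed claims went straight through, so the rate is two thirds. The malformed claim is excluded from the denominator, matching the definition above.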

As the volumes of data related to processes have grown, more sophisticated analytics approaches have been needed, have been enabled, and have emerged, where by ‘more sophisticated’ I mean ‘statistically-based’.

As you introduce large data and the statistically-based analytics their effective use demands, you need people with different skill sets to do that analysis.

You already have them in Finance. And if you are a payer, because risk is a major aspect of your business, you already have them in Actuarial and in their data support team Healthcare Informatics.

But you don’t have them everywhere yet – and they are coming.

This kind of change – the introduction of formal big data analytics techniques into existing business processes – happened on Wall Street in the 70’s and 80’s. Data scientists with big data skills gained in fields such as physics, genetics, and biochemistry were brought in to apply those skills to predicting the market.

On Wall Street those traders who perform big data analytics are called ‘quants’.

The impacts on Wall Street have been documented in popular books such as Nassim Nicholas Taleb’s ‘Fooled by Randomness’ and ‘The Black Swan’ and Scott Patterson’s ‘The Quants: How a New Breed of Math Whizzes Conquered Wall Street and Nearly Destroyed It’.

We are well into the ‘Rise of the Quants’ in healthcare. As it was on Wall Street back in the day, it is no surprise in any large payer or provider to meet PhD data scientists whose primary expertise might be something like population biology, but whose analytics skills gained in pursuit of that expertise have been honed to a sharp edge which they are now using to carve up health data.

The application of advanced analytics generally starts with building models designed to achieve particular results.

Data scientists from other fields have practical and theoretic model building skills, but they have been applying them in their own field of study. Building models for a new domain, such as health care services or provider contracting, requires developing a deep understanding of the problem domain.

But not all of the existing resources in a business area have the kind of conceptual acumen needed to assist in the building of such models.

And not all the data scientists have the people skills needed to facilitate such model building.

So it is likely we need a third role – expert analytics facilitators.

There is also a culture clash coming. Many experienced businesspeople are accustomed to making decisions on their own using shallow analytics, experience and intuition.

Remember the scene from Moneyball (the wonderful movie for which I am providing free advertising) where Billy Beane (played by Brad Pitt) is surrounded by old school baseball scouts, deeply experienced in evaluating baseball players.

They are bewildered and offended when Beane turns to his quant, played by Jonah Hill, to make decisions based on statistical analysis.

It is even more problematic when Beane tries to dictate operations – who should be played – to the team’s manager Art Howe, played by the late Philip Seymour Hoffman.

That scene will play out over and over in healthcare as we operationalize analytics.

Creating an Analytics Culture

To minimize its impact, and to speed its integration, you should strive to create a culture of analytics.

Dr. Hossein Arsham, Wright Distinguished Research Professor at the Merrick School of Business in Baltimore, argues that analytics skill has to be part of the fundamental toolkit of any business manager:

“Almost all managerial decisions are based on forecasts. Every decision becomes operational at some point in the future, so it should be based on forecasts of future conditions.”

You must create a culture in which those skills are present and valued.

First, the effective application and integration of analytics demands a common statistical vocabulary. All techniques for deriving value from data depend on distinguishing patterns in data from randomness. Those distinctions are established by statistical means.

So you must establish a statistically conversant culture. Here are some steps you can take to drive that:

- Add statistical awareness and skills as hiring criteria for managers and above.

- Add demonstrable statistical awareness to the evaluation criteria of managers and above.

- Create professional development courses to provide basic statistical knowledge.

Second, your managers, directors and vice presidents all use business productivity tools that have analytics capabilities built in. For example, most of them have created trend lines in Excel at one time or another.

But how many know what that R-squared value you can display means? And how many know when to use which kind of trend line?
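For the linear trend line, that R-squared value is the share of the variation in the data that the fitted line accounts for: 1 minus the ratio of the leftover (residual) variation to the total variation around the mean. Here is a small sketch of that calculation using ordinary least squares. The function name and sample numbers are mine, for illustration only, and it assumes the x values are not all identical and the y values are not all identical.

```python
def linear_trend(xs, ys):
    """Fit y = intercept + slope * x by least squares; return R-squared too."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variation: what the line fails to explain.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    # Total variation of y around its own mean.
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1 - ss_res / ss_tot
    return slope, intercept, r_squared


# Four made-up monthly observations that zigzag around an upward trend.
slope, intercept, r_squared = linear_trend([1, 2, 3, 4], [1, 3, 2, 4])
```

For these four points the line is y = 0.5 + 0.8x and R-squared works out to 0.64, meaning the line explains about 64% of the variation. A value near 1 says the line fits the points closely; a value near 0 says the line explains almost none of the variation.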

Many of your managers are also SQL jockeys. But how many of them know that Oracle SQL has a one-way analysis of variance (ANOVA) built in?

Or on a more advanced level that some databases (such as Greenplum) have Naïve Bayesian Classifiers built in?

Teaching desktop analytics to all the managers and above who are interested will provide immediate value to the company – it will help them make more informed decisions. You should create a Center of Excellence to support them.

The expanded use of desktop analytics will also stoke a hunger for larger scale data driven analytics. If they are doing trend lines in Excel, they will find cases where Excel won’t work because the data is too big, or too sophisticated. They will want more, more data, more power, more speed.

Your rollout of desktop BI technology will succeed because it will satisfy that hunger.

And you will have created a culture of analytics.

The Relentless Reduction of Redundancy

The increasing perfection of information will reduce operational redundancy both internally and across your strategic partners. Business decision contexts will come to the fore. Information plumbing will become increasingly automated.

The result will be that the work each group does, that each team does, will get distilled down to its essence. You will have fewer people, but they will all be advanced experts in what they do.

Every team, every hierarchy, needs to figure out what that is. A trenchant business decision context analysis will reveal the true nature of their value.

The same thing will be happening across your trading partners. A number of near-term expedients will give way as new solutions are rapidly developed that enable the orchestration of experts who are empowered to focus on what they do best.

It seems obvious that population health management is not what most providers do any more than population biology is what most biologists do. Individual providers provide individual care. Just as obvious is that population health management is not what health insurers do. They help pay for healthcare. Population health management is obviously a community function – it is what we as a body of people want and need to do for the collective good. That collective good spans doctors, and spans health plans, within a region and across the nation, and requires a bureaucracy to manage. Do we really think forcing providers to create new bureaucracy is going to ultimately reduce health care costs?

Health care is not what health insurers do. We do utilization management to control costs, and welcome the beneficial side effect that lowering costs leads to better health. In an ideal world health payers should be able to play their appropriate role of cost containment without shame or rationalizing cost containment as care.

But in our world today, at the cusp of more perfect information, there is long-existing redundancy in place. All payers have health care services.

And there are efforts such as ACOs, doomed to be short-lived – or as short-lived as such misguided large-scale efforts can be – which are tacking across the lake toward the future, not running with the prevailing wind. (I understand my opinion of ACOs puts me in a tiny minority these days. I believe a paradigm shift in health insurance is coming which will make them moot. More about that in a future post.)

The next decade will have a confusing plot, as each health care actor plays different roles at different times. But it will be beneficial for us all to broaden our perspectives and realize that, in the long term, the increasing perfection of information will enable us as a nation to systematically eliminate redundancy and optimize overall value by having each actor do what they do best.

Olivier was charming as Charles, the Prince Regent, starring alongside Marilyn Monroe in ‘The Prince and the Showgirl’.

But if we were choosing how best to spend that valuable resource – Olivier – we would have him focus on what he does best.

(If Olivier is not ringing any bells for you, think Meryl Streep in “Mamma Mia” versus in “Sophie’s Choice”. Streep not ringing any bells? Try Amy Adams in “The Wedding Date” versus “American Hustle.” Still nothing? I’m out.)

The Organizational Structure is Forced to Evolve

Fueled in part by technological advancement, the pace of business change continues to increase. It used to be the case that the key challenge in a large-scale IT project, such as rolling out a new monolithic application such as Salesforce, was getting the business requirements correct. We all spent a lot of time up front on requirements gathering. But we did not always get it right. Agile emerged in large part as a response to the inevitable failure of big up front requirements and design. But what we are seeing now in IT is that we are not simply missing requirements at the beginning. Driven by the pace of business, and our expert understanding of its evolution, the requirements are actually different two months in, very different six months in, and unrecognizably different a year in.

What we need to start delivering is atomic functionality that can be quickly created, dynamically integrated, broken apart, and reassembled into new configurations.

Fortunately there is a technological convergence to enable this.

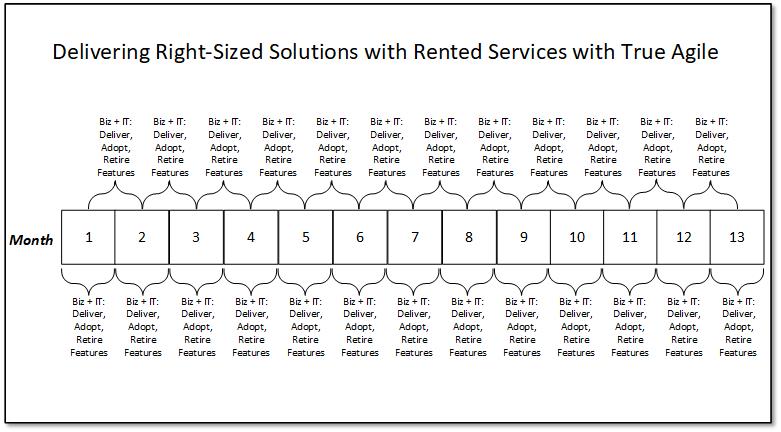

As we’ve seen, newly realized rapid development technologies based on APIs and the Cloud will result in markedly accelerated times to market. The advent of right-sized solutions will reduce the size of each deliverable. The adoption of agent-driven agile from our increased consumer focus will make each sprint more efficient. The focus on business decision contexts will enable clear, small, discrete scopes.

As a result the organizational relationship between the business and IT will be forced to evolve as the seven month, nine month, year and a half delivery times of yore : ) give way to the cadenced delivery of new and improved functionality in a sprint over sprint timeframe.

This represents a radical change.

Many large health care enterprises started as ‘Build It’ shops, which designed and coded their own mainframe-based applications. (There is never actually only one way of delivering new functionality – the distinction I am drawing here describes the dominant method of delivering vital operational functionality, and is more thematic than accurate, gritty detail, so just roll with me, please.) These took years to develop, so had to be kept around nigh-on forever to justify the cost. These massive monoliths were the operational hearts of healthcare enterprises.

Then big healthcare IT evolved into ‘Buy It’ shops, where they bought and integrated third party technologies, such as Epic and Cerner and Facets and Salesforce. The evolution from Build It to Buy It marginally improved delivery times, and enabled shorter lifespans for solutions. These solutions still needed massive integration projects to bring them online. The introduction of Agile – usually wrapped by Waterfall, which was needed both to transition to Agile successfully and to integrate Agile with existing project management and budget approaches – did not change the overall approach from a high level. These solutions were – are – frequently kept around for lifespans of five or more years.

That model must change. The pace of business moving into the 2020’s demands that the generations of our evolution be measured in weeks, not years. Enter a third option. To ‘Buy’ and ‘Build,’ as we move to Everything-as-a-Service we can add ‘Rent.’ Here is what that high-level picture looks like as we move from Build to Buy to Rent.

The new and changed tools delivered will not require big rollouts or extensive training times any more than the new version of PewDiePie: Legend of the Brofist does.[1]

Overall efficiency will demand the complete elimination of silos. There will no longer be ‘business’ and ‘IT’ as discrete operational entities. We will simply have agile teams which own business decision contexts and their support staffed with business, IT and analytics experts who are making continuous improvements to their tools and processes sprint over sprint.

As we move to more direct business control of logic through increasing configurability and the introduction of rules engines, it will be very useful to have trained logicians – all programmers are necessarily logicians – closely engaged.

The impact of the fine-grained integration of IT and the business will be bigger on the business than on IT. Tool and process development will no longer happen in parallel to the day-to-day, then force big changes once or twice a year. IT here has already moved strongly to the adoption of Agile. The business needs to go all in as well.

If we are all going to stay competitive in the rapidly changing – the accelerating – market we are faced with, we need to be able to respond in weeks, not months or years.

The sooner we move aggressively in that direction the sooner we will realize the benefits.

The Changing Nature of Business Expertise

The nature of business expertise will necessarily evolve as well. With greater automation, individuals won’t be able to ‘learn the ropes’ by experience moving up from simpler decisions to more complex ones – the simpler ones will be automated. The new experts will need to be able to leverage data – they need to be expert in finding, analyzing, and interpreting data. With respect to the particular knowledge of their domain, there will be a greater reliance on the structured passing of knowledge, such as that gained from formal education, certifications, guilds, and trade associations.

Business Requirements Redux

As I mentioned above, one of the biggest challenges we have had in the effective design and delivery of information solutions has been in getting a handle on the business requirements. Despite IT’s adoption of Agile, we have been forced to have an upfront capture and articulation of requirements in the waterfall ‘wrapper’ we have around Agile. That is in part because our Agile process itself is primarily used as a way of partitioning work and assigning it to resources. It must be that, but to succeed it must also be the requirements mechanism – that is built into Agile’s bones.

Until we reset on Agile we will not be able to achieve the consistent, accelerated delivery cadence the rapidly changing market will demand.

There are a number of reasons we are struggling with Agile.

One is our approach to Agile stories. This is not our problem alone – it is a function of the adoption of Agile for back-office IT.

Agile was designed for creating applications. Agile was not designed for integration or aggregation projects. So its adoption in the back office has been problematic everywhere, not just for us.

I speak to this at length in one of my recent posts. The bottom line is that we fundamentally need to write stories as the achievement of human users’ intentions – system users are their proxies.

Another challenge is the grain and frequency of business engagement. As I described above, I believe we are headed toward the inevitable integration of business and IT. One of the reasons we all struggle now with Agile is inconsistent and inadequate business engagement.

A number of the technology themes we’ve discussed will help us up the ramp of better, necessary and inevitable Agile integration.

Having Consumers emerge as the data heart of our operational systems will help us focus on creating stories about enabling those individuals to meet their objectives.

The distillation of processes to defined Business Decision Contexts will help us categorize and define those intentions. We are either creating a Context, triggering a Context, creating a Guard to trigger the next action downstream, or automating the decision in the Context.

Creating a tool environment of well-orchestrated right-sized solutions will enable the sprint-over-sprint delivery cadence Agile is built to achieve. A common back-office Agile problem is that given solution sizes and scopes most sprints do not deliver demonstrable user functionality. That will become easier as the scope of work is consistently smaller.

Adopting a continuous delivery capability across the enterprise will provide the level of quality and automation needed to keep pace.

The bottom line is we can no longer afford to build monolithic solutions. We need to build the service and data integration framework to support tiny solutions, and abandon the heavy-requirements-up-front style demanded by monolith development in favor of the agile, continuous delivery approach needed to build right-sized solutions.

Next up, the strategic business impacts of our five technology themes.

Stay tuned.

[1] “PewDiePie: Legend of the Brofist” is a smartphone game. If you have been living off the grid with no Internet for the last decade, PewDiePie is the online alias of a controversial Swedish comedian and producer named Felix Arvid Ulf Kjellberg who has one of the most-subscribed YouTube channels of all time – over 10 billion with a ‘B’ views. To put that in perspective, if we back-of-the-envelope the total viewership of the five ‘Twilight’ movies using a little Wikipedia data, with $3.5B in box office at call it $10 a ticket plus around $36M in DVD sales, triple that arbitrarily for streaming, we are still talking 5% – five percent! – of the viewership of PewDiePie. He has also become an influential game critic, and the PewDiePie game is a kind of homage.